curated news excerpts & citations

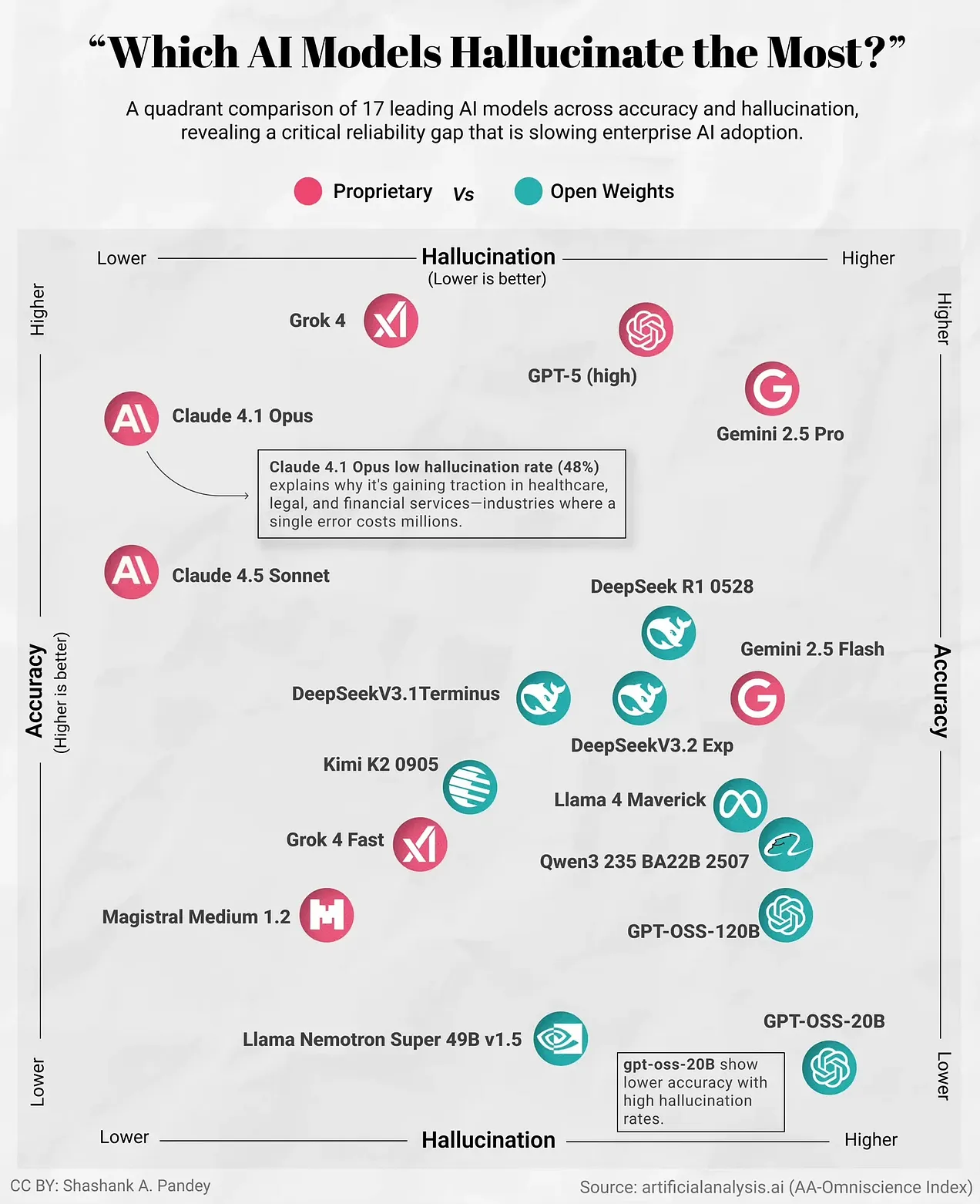

James Eagle: Hallucination is now the real AI bottleneck

This matters because the usefulness of AI now hinges less on how clever models sound and more on whether they can be trusted. As AI moves from demos into healthcare, law and finance, a single wrong answer is no longer a curiosity. It is a liability.

This chart compares leading AI models across two dimensions: accuracy and hallucination. Lower hallucination is better. What stands out is how wide the gap has become. Some of the most capable models also hallucinate more often, while others sacrifice a bit of raw performance to stay grounded. Claude models cluster toward lower hallucination, which helps explain their traction in regulated industries. Several open-weight models sit at the opposite extreme, offering flexibility and cost advantages but with far higher error risk.

The deeper point is that scale and fluency are no longer enough. Bigger models trained on more data do not automatically become more reliable. In fact, as systems grow more confident and articulate, their mistakes can become harder to spot. That creates a trust problem, not a technology problem. Until hallucination rates fall meaningfully, AI adoption in high-stakes settings will remain cautious and uneven.

(James Eagle more…)

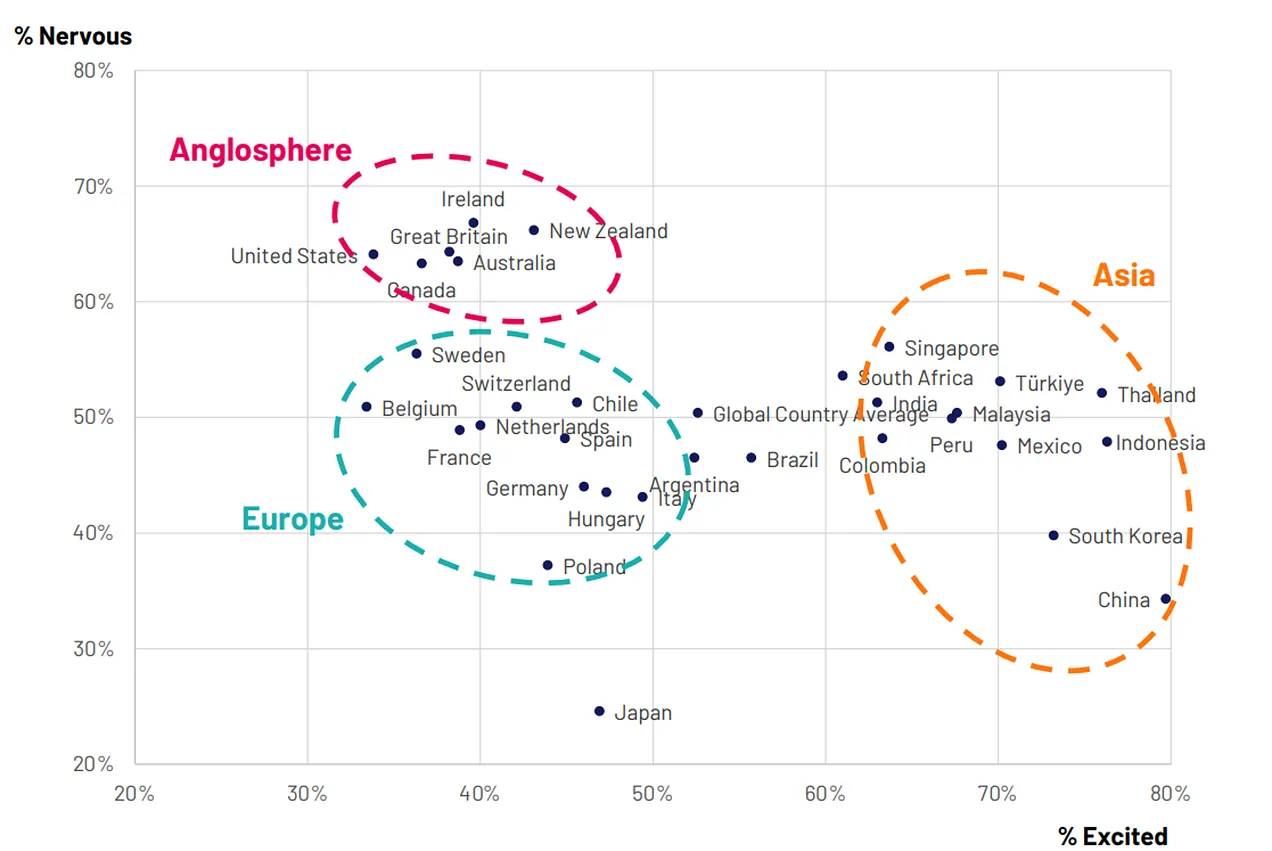

Howard Yu: The One Chart That Explains Everything

If one chart captures the global mood as the holidays arrive, it isn’t a stock index, an inflation line, or a defense budget.

On the X-axis, you have excitement; on the Y-axis, nervousness. The world splits into two distinct clusters.

You have the United States and much of Western Europe in one cluster: wary, anxious, bracing for impact. In the other, China and its Asian neighbors: optimistic, eager, racing to adopt.

(Howard Yu more…)

-

Guardian: US economy grew strongly in third quarter, GDP report says

…

The US economy has demonstrated resilience in a year of extraordinary challenges. Trump announced sweeping tariffs in April on the US's major trading partners, and while he has watered down or rolled back many of the levies, the uncertainty they have caused has rattled businesses and consumers. The US economy contracted in the first quarter of 2025 as businesses tried to get ahead of Trump's threatened tariffs with an unprecedented surge in imports. But GDP growth soon recovered, spurred on by massive investment in artificial intelligence and robust consumer spending.

In a note to investors, Paul Ashworth, chief North America economist at Capital Economics, wrote: “The economy maintains considerable momentum. That said, the shutdown could trigger a slowdown in the fourth quarter to nearer 2% annualised.”

(Guardian more…)

-

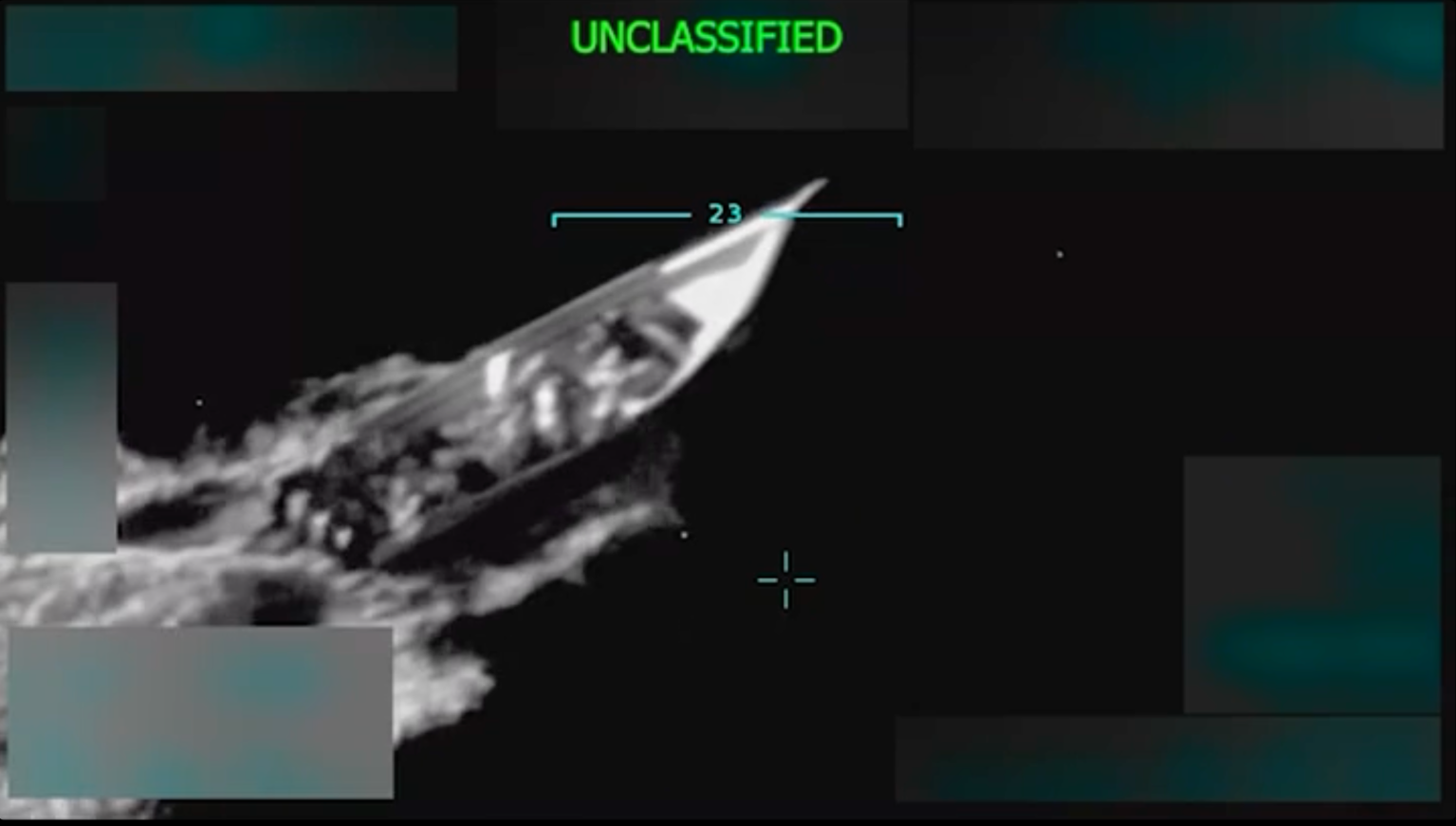

Intercept: U.S. Military Killed Boat Strike Survivors for Not Surrendering Correctly

Before ordering a second strike on their boat, Adm. Frank Bradley sought legal advice from JSOC’s top lawyer, Col. Cara Hamaguchi, The Intercept has learned.

(Intercept more…)

-

SCOTUSblog: Supreme Court rejects Trump’s effort to deploy National Guard in Illinois

The Supreme Court on Tuesday left in place a ruling by a federal judge in Chicago that bars the Trump administration from deploying National Guard troops in Illinois. In a three-page unsigned order, the justices turned down the government’s request to put the temporary restraining order issued by U.S. District Judge April Perry on Oct. 9 on hold while litigation continues in the lower courts. “At this preliminary stage,” the court said, “the Government has failed to identify a source of authority that would allow the military to execute the laws in Illinois.” It was the second loss for the Trump administration before the court in only four days.

Three justices dissented from Tuesday’s order. Justice Samuel Alito, in a 16-page decision joined by Justice Clarence Thomas, wrote that “[w]hatever one may think about the current administration’s enforcement of the immigration laws or the way ICE has conducted its operations, the protection of federal officers from potentially lethal attacks should not be thwarted.”

Justice Neil Gorsuch indicated that he too would have granted the government’s request.

(SCOTUSblog more…)

-

Jess Piper: Not That Kind of White

White supremacy

Qasim Rashid, Esq.: December 24 is the 160th Anniversary of the KKK’s Founding

-

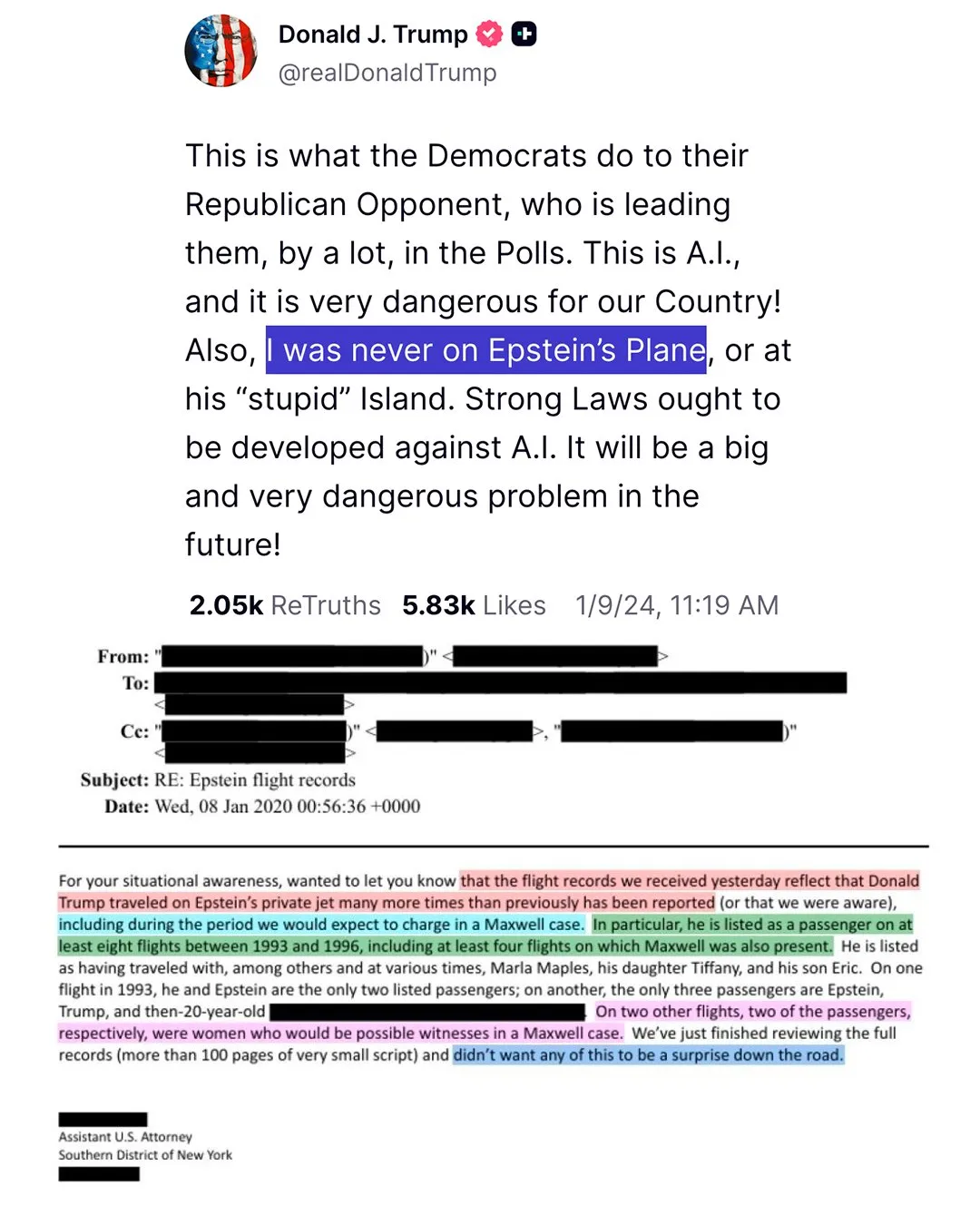

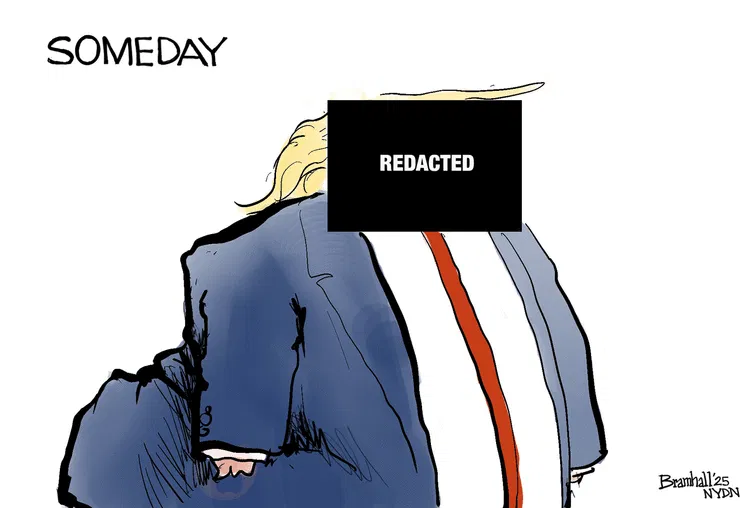

Reuters: Trump flew on Epstein jet eight times in the ’90s, according to prosecutor email

- Email in Epstein files says Trump flew on private jet eight times in 1990s

- Justice Department says some documents contain unfounded allegations against Trump

- Government releases nearly 30,000 pages of additional records

Guardian: Some Epstein file redactions are being undone with hacks

Unredacted text from released documents began circulating on social media on Monday evening.

People examining documents released by the Department of Justice in the Jeffrey Epstein case discovered that some of the file redactions can be undone with Photoshop techniques, or by simply highlighting the text and pasting it into a word-processing file.

Raw Story: ‘Took me into a fancy hotel’: FBI received explosive tip about Trump and Epstein

Raw Story: Trump’s DOJ declined to prosecute ’10 co-conspirators’: Epstein documents

Brian Allen: A Purported Epstein Letter to Larry Nassar, Handwriting Review, and a Vanishing Record

AXIOS: Trump administration expects Epstein files release could last another week

-

Heather Cox Richardson: Letters from an American – December 23, 2025

On December 24, 2025, the North American Aerospace Defense Command, or NORAD, will celebrate seventy years of tracking Santa’s sleigh.

(Heather Cox Richardson more…)

GriftMatrix

Trump Action Tracker

Timeline: Tracking the Trump Justice Department’s Anti-Voting Shift

Tracking the Lawsuits Against Trump’s Agenda

Trump Pardons Database

Project 2025 Tracker

DOGE Tracker

ProPublica: Elon Musk’s Demolition Crew

Wired: 6 Tools for Tracking the Trump Administration’s Attacks on Civil Liberties